Our most frequent weather/climate questions often reflect different time frames. What will the weather be tomorrow or next week? What kind of summer (or winter) will we have? What is our climate future? I will try to show that there are good reasons why we can’t do the first two very well and equally good reasons why we CAN do the third.

This essay addresses the daily-to-weekly forecast and pits artificial intelligence (it’s everywhere!) against chaos. A battle of titans.

Predicting Tomorrow’s Weather

The physical processes that cause weather to happen are well known. The first “primitive equations” describing the forces that drive major air currents and the movement of storms were derived by Vilhelm Bjerknes in the early 1900s.

While additions have been made to those first equations, the accuracy of forecasts has always been limited more by the frequency and spatial density of measurements of the atmosphere and surface, and by computer power, than by the basic science. As both measurements and computational power have multiplied exponentially over the last few decades, the accuracy of forecasting has increased as well.

As has the complexity of the process used to produce that forecast. In The Weather Machine, Andrew Blum describes in detail the 350-person operation of the European Centre for Medium-Range Weather Forecasts (ECMWF), which continuously ingests billions of bits of information from weather stations and satellites to produce a global weather forecast at a fine spatial scale every 12 hours.

How fine is that scale?

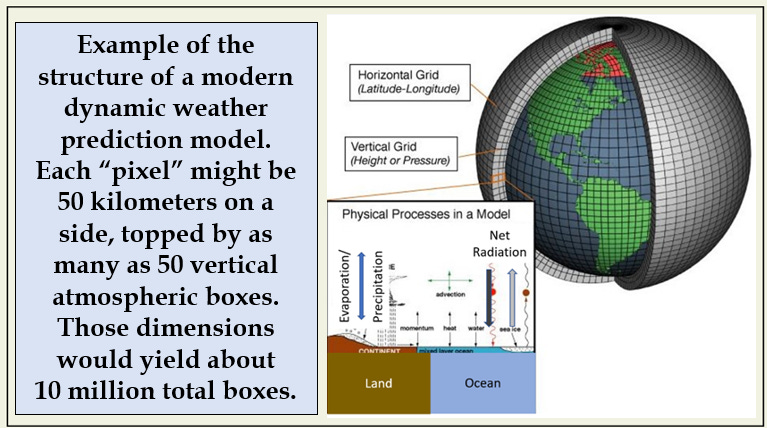

We want a forecast that fits our location, not a general regional view, so modern dynamic forecast models operate at a spatial resolution of about 50 km (30 miles) on the ground. A square 50 km on a side covers 2,500 km², an area about the size of Luxembourg or the state of Rhode Island. Divide the Earth’s surface area of about 500 million km² by that, and you get about 200,000 squares!
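The arithmetic in this paragraph can be checked with a short Python sketch. It assumes, as the text does, a rounded Earth surface area of 500 million km² and equal-sized squares (real model grids vary with latitude). The 25 km and 10 km resolutions are hypothetical comparisons, added only to show that halving the grid spacing quadruples the number of squares.

```python
# Back-of-envelope count of horizontal grid squares.
# Assumptions: Earth's surface area rounded to 500 million km^2, and
# squares of equal size (real model grids vary with latitude).

EARTH_SURFACE_KM2 = 500_000_000  # rounded, as in the text


def grid_columns(resolution_km: float) -> int:
    """Number of resolution_km x resolution_km squares covering the Earth."""
    square_area_km2 = resolution_km ** 2
    return round(EARTH_SURFACE_KM2 / square_area_km2)


# 50 km is the figure from the text; 25 km and 10 km are hypothetical.
for res in (50, 25, 10):
    print(f"{res:>3} km grid: {grid_columns(res):>10,} squares")
```

Because the count scales with the inverse square of the grid spacing, each refinement in resolution is far more expensive than it first appears.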

But because the vertical movement of air parcels in the atmosphere is crucial to understanding and predicting weather, a model might stack as many as 50 layers of atmosphere above each square on the ground. With 200,000 locations and 50 atmospheric layers over each, you are now at 10 million atmospheric boxes. The actual size of the boxes varies with location and height in the atmosphere, and changes between models, but you get the picture.

Air moves quickly through such small atmospheric boxes, so the state of each box has to be recalculated frequently: about every 10 minutes, or 6 times per modeled hour, which comes to 144 times per modeled day. With additional variables added to the basic primitive equations, the number of calculations gets very hard to imagine, but with 10 million boxes updated 144 times per modeled day, even a small number of equations means tens of billions of calculations per day, or more.
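The same back-of-envelope count, extended to all the boxes and time steps, can be sketched as follows. It uses the round numbers from the text and assumes every box is updated at every step. The `equations_per_box` values are hypothetical stand-ins for “a small number of equations,” with each equation counted as a single calculation.

```python
# Rough count of box updates, using the round numbers from the text.
# Assumption: every box is updated at every time step; real models use
# variable grids and time steps.

GRID_SQUARES = 200_000   # ~50 km squares covering the Earth
LAYERS = 50              # vertical layers above each square
STEP_MINUTES = 10        # one update every 10 modeled minutes

boxes = GRID_SQUARES * LAYERS                # 10,000,000 boxes
steps_per_day = 24 * 60 // STEP_MINUTES      # 144 steps per modeled day
box_updates_per_day = boxes * steps_per_day  # 1.44 billion box updates

# Hypothetical equation counts; each equation is counted as one calculation.
for equations_per_box in (1, 10, 50):
    total = box_updates_per_day * equations_per_box
    print(f"{equations_per_box:>2} equations/box: {total:,} calculations per modeled day")
```

With ten or more equations per box, the total passes ten billion calculations for every modeled day.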

How Accurate Can Models Be At This Scale?

Unlike financial pundits, sports writers, or entertainment prognosticators, weather forecasters rarely look back to discuss how accurate their previous guesses were. Commercial weather sites seem to change their predictions for your particular square on the ground several times a day, and in general we don’t seem to expect accuracy more than a couple of days into the future, and sometimes not even that far. At the same time, the accuracy of severe weather warnings covering the next couple of hours, issued by those same forecasters, is absolutely critical and saves lives every year.

A rare exception to this paucity of accuracy reporting is a page on the U.S. Weather Service site that captures performance for temperature and precipitation prediction in detail. The trends in this figure, taken from that page, are repeated in almost every case: Forecasts have gotten better over the last 50 years, but always become less accurate the farther you look into the future.

Note that this figure only goes out 7 days, and until 1998, only out 5 days.

In Global Warming: The Complete Briefing, John Houghton presents data like those in this figure and substantiates both trends. He notes that the three-day forecasts at the time his book was published were as good as two-day forecasts 10 years before. Aligning this result with a similar graph on increases in computing power, he concludes that this one-day improvement in forecasts over 10 years coincided with a 100-fold increase in computing muscle.
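Houghton’s 100-fold increase over ten years can be turned into a yearly rate with simple compound-growth arithmetic. The sketch below assumes the growth was steady across the decade, which is a simplification.

```python
import math

# Houghton's figures: roughly a 100-fold increase in computing power
# over 10 years. Assumption: steady compound growth over the decade.
GROWTH_FACTOR = 100
YEARS = 10

annual_factor = GROWTH_FACTOR ** (1 / YEARS)                          # ~1.58x per year
doubling_time_years = YEARS * math.log(2) / math.log(GROWTH_FACTOR)  # ~1.5 years

print(f"Computing power grew about {annual_factor:.2f}x per year")
print(f"i.e. it doubled roughly every {doubling_time_years:.1f} years")
```

A 100-fold gain over a decade works out to roughly a 58 percent increase per year, or a doubling about every year and a half.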

Why does forecast accuracy fade so quickly the farther we look into the future?

Here is where we run into what some meteorologists call “the wall,” which can be summed up in a single word – chaos!

The word derives from an ancient Greek term meaning abyss or total void. Modern synonyms include disorder, confusion, mayhem, havoc, turmoil, and anarchy. You can follow the concept through the centuries in literature and religion, but let’s restrict it here to meteorology, where it has a very particular mathematical meaning.

Chaotic systems are those whose future state is extremely sensitive to initial conditions, in part because the relationships among variables are non-linear and there are strong feedbacks among them. A change in one part of the system can cause an even larger change in another part, which then feeds back to affect the first part. So small errors in the definition of the initial state of the atmosphere at the beginning of a model run quickly grow into larger errors in the prediction at each successive time step.
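Sensitivity to initial conditions can be seen in a few lines of Python using the Lorenz equations, a classic three-variable toy model of chaos published by Edward Lorenz in 1963. This is an illustration, not a weather model: the parameter values are the standard textbook ones, and the tiny starting difference between the two runs stands in for measurement error.

```python
# Two runs of the Lorenz (1963) system that start almost identically.
# A toy model of chaos, not a weather model; standard parameter values.

SIGMA, RHO, BETA = 10.0, 28.0, 8.0 / 3.0


def lorenz(state):
    """Time derivatives (dx/dt, dy/dt, dz/dt) of the Lorenz system."""
    x, y, z = state
    return (SIGMA * (y - x), x * (RHO - z) - y, x * y - BETA * z)


def rk4_step(state, dt):
    """Advance one time step with the classical 4th-order Runge-Kutta method."""
    def add(s, k, h):
        return tuple(si + h * ki for si, ki in zip(s, k))

    k1 = lorenz(state)
    k2 = lorenz(add(state, k1, dt / 2))
    k3 = lorenz(add(state, k2, dt / 2))
    k4 = lorenz(add(state, k3, dt))
    return tuple(
        s + dt / 6 * (a + 2 * b + 2 * c + d)
        for s, a, b, c, d in zip(state, k1, k2, k3, k4)
    )


def separation(a, b):
    """Straight-line distance between two model states."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b)) ** 0.5


DT = 0.01
STEPS = 4000  # 40 model time units

run_a = (1.0, 1.0, 1.0)
run_b = (1.0 + 1e-8, 1.0, 1.0)  # a "measurement error" of one part in 100 million

history = []  # (time, separation) every 500 steps
for step in range(STEPS + 1):
    if step % 500 == 0:
        d = separation(run_a, run_b)
        history.append((step * DT, d))
        print(f"t = {step * DT:5.1f}   separation = {d:.2e}")
    run_a = rk4_step(run_a, DT)
    run_b = rk4_step(run_b, DT)
```

The separation starts at one part in a hundred million and keeps growing until it is as large as the system itself, at which point the two runs bear no useful relationship to each other.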

There is no cure for this, as we will never have a completely accurate measurement of the conditions in all of those 10 million boxes at any one time.

Increases in measurement density and computing power can reduce the rate at which the errors accumulate, but the errors themselves will never approach zero.
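Why better measurements help but cannot remove the wall can be sketched with a simple exponential error-growth model. The doubling time and the error tolerance below are hypothetical values chosen for illustration, and the sketch ignores model error and the eventual leveling off of errors.

```python
import math

# Assumptions (hypothetical, for illustration only): small errors double
# every DOUBLING_DAYS days, and a forecast stops being useful once the
# error reaches TOLERANCE (arbitrary units).

DOUBLING_DAYS = 1.0  # hypothetical error-doubling time
TOLERANCE = 1.0      # hypothetical "no longer useful" error level


def useful_days(initial_error: float) -> float:
    """Days until an exponentially growing error reaches TOLERANCE."""
    doublings = math.log2(TOLERANCE / initial_error)
    return doublings * DOUBLING_DAYS


for e0 in (0.1, 0.01, 0.001):
    print(f"initial error {e0:<6} -> useful for about {useful_days(e0):.1f} days")
```

Each tenfold improvement in the initial error adds the same fixed number of useful days, about 3.3 here, so no finite improvement in measurements extends the forecast indefinitely.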

But wait, is this conventional wisdom still true in the new information age where Artificial Intelligence (AI) seems to be creating new ways to analyze data and apply the results?

The forecast community was disrupted recently by a research project that brought the power of AI to bear on weather forecasting. I have been interested in going deeper into the meaning and impact of AI in general, and this report offered the opportunity to do that on a topic relevant to this Substack site.

Artificial Intelligence

So what is artificial intelligence?

While there has been a recent surge in interest in this technology, the phrase and the field of study have been around for nearly as long as there have been computers. The Wikipedia article on this topic cites 1956 as the year the term was first used.

From the beginning, the challenge was to devise computer programs that could mimic human intelligence. Boomers and Millennials may remember the program called Dr. Sbaitso from the 1990s that attempted to mimic a conversation with a psychologist or therapist. It was never very convincing!

That kind of language processing was an early focus of AI, but limits in both computer power and theory restricted its usefulness, and AI went through a period known as the “AI Winter,” from about 1974 to 2000, during which research and application stagnated.

AI returned to public consciousness in 1997 when IBM’s Deep Blue system defeated world chess champion Garry Kasparov. Interest in the technology was enhanced in 2011 when a follow-on system, Watson, beat two human contestants on Jeopardy.

The recent boom in AI is often traced to the development of Deep Learning in the 2010s, which could be applied to the enormous growth in information on every topic available on the Web. A parallel explosion in computing speed and data storage capacity has fueled a renaissance in the field.

AI systems now drive the most advanced web search routines and can scan texts, derive meaning from what is written (meaning as pattern of usage), and generate new summary text (this is called generative AI, as in ChatGPT).

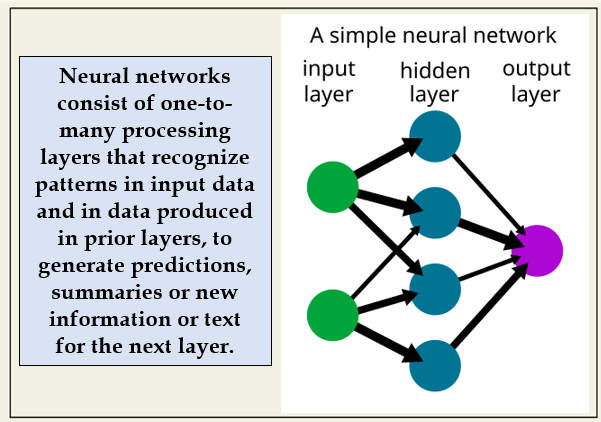

The mysterious component of all this for me is Deep Learning, a technology that employs neural networks to process and reprocess data in an iterative search for recognizable patterns. The term “neural network” was chosen deliberately: the approach seeks to emulate how information is stored and processed in the human brain.

While Deep Learning is a rich and complex field, I find it useful to think of AI as a computationally intensive method for scanning, linking, and summarizing patterns in exceedingly large sets of information or data. The several layers in a neural network model refine those discovered relationships at each step, improving the accuracy of derived predictions.

A key here is that the method does not begin with, use, derive or make any reference to scientific formulas or theory! It really is just a method for extracting patterns from existing data.
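The idea of extracting a pattern from data without any formula can be illustrated with a deliberately tiny neural network, far simpler than GenCast. In this hypothetical Python sketch, a network with one hidden layer of eight units is fitted by plain gradient descent to 31 input/output pairs. The sine curve that generated the pairs is never given to the network; it only sees the numbers.

```python
import math
import random

# Hypothetical toy: a one-hidden-layer network fitted by gradient descent.
# The sine curve stands in for any measured relationship; the network
# only ever sees the (x, y) pairs, never the formula.

random.seed(0)
xs = [i / 5 for i in range(-15, 16)]  # 31 inputs from -3.0 to 3.0
ys = [math.sin(x) for x in xs]        # the "observations"

HIDDEN = 8
LEARNING_RATE = 0.02
w1 = [random.uniform(-1, 1) for _ in range(HIDDEN)]  # input -> hidden weights
b1 = [random.uniform(-1, 1) for _ in range(HIDDEN)]  # hidden biases
w2 = [random.uniform(-1, 1) for _ in range(HIDDEN)]  # hidden -> output weights
b2 = 0.0                                             # output bias


def predict(x):
    """Return the network output and the hidden-layer activations for input x."""
    hidden = [math.tanh(w1[j] * x + b1[j]) for j in range(HIDDEN)]
    output = sum(w2[j] * hidden[j] for j in range(HIDDEN)) + b2
    return output, hidden


def mean_squared_error():
    return sum((predict(x)[0] - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


initial_loss = mean_squared_error()
for epoch in range(2000):
    # Accumulate gradients of the mean squared error over all samples.
    g_w1, g_b1, g_w2 = [0.0] * HIDDEN, [0.0] * HIDDEN, [0.0] * HIDDEN
    g_b2 = 0.0
    for x, y in zip(xs, ys):
        out, hidden = predict(x)
        err = 2 * (out - y) / len(xs)  # d(loss)/d(output) for this sample
        g_b2 += err
        for j in range(HIDDEN):
            g_w2[j] += err * hidden[j]
            back = err * w2[j] * (1 - hidden[j] ** 2)  # chain rule through tanh
            g_w1[j] += back * x
            g_b1[j] += back
    # Move every parameter a small step downhill.
    for j in range(HIDDEN):
        w1[j] -= LEARNING_RATE * g_w1[j]
        b1[j] -= LEARNING_RATE * g_b1[j]
        w2[j] -= LEARNING_RATE * g_w2[j]
    b2 -= LEARNING_RATE * g_b2

final_loss = mean_squared_error()
print(f"mean squared error: {initial_loss:.4f} before training, {final_loss:.4f} after")
```

The error after training is lower than before, even though nothing in the network refers to the rule that produced the data.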

Weather information is well-suited to this approach as standardized numbers are recorded consistently at frequent intervals at thousands of locations. Satellite sources infuse billions of bits of additional information into the data landscape on a daily basis.

AI and Weather Forecasting

That disruptive article on weather forecasting appeared in the premier science journal Nature and reported the results of applying Machine Learning (ML) to produce those forecasts.

ML is a subset of artificial intelligence that focuses on the development of algorithms and statistical models that enable computers to learn from and make predictions or decisions based on data. This differs from Large Language Models (LLMs) like ChatGPT that are designed to understand, generate, and manipulate human language based on vast amounts of textual data.

If those last two sentences don’t sound like my writing, it is because both contain direct quotes from ChatGPT’s response to a request to define ML and LLM!

As part of the study reported in Nature, a software system called GenCast was used to produce global weather forecasts up to 15 days into the future. The system was “trained” on 40 years of actual weather data up to 2018 and used what it “learned” to predict the weather for 2019. GenCast predictions and those of the best conventional European system (produced by the ECMWF) were then compared with measured weather data for 2019.
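The evaluation design described here, fitting on earlier years and then scoring on a later year the system never saw, can be sketched in Python. The records, the trivial “predict the training average” model, and the choice of mean absolute error as the score are all hypothetical placeholders, not GenCast’s data, model, or metrics.

```python
from statistics import mean

# Hypothetical sketch of a time-based train/test split: nothing from the
# held-out year is allowed to influence the model.


def split_by_year(records, last_training_year):
    """Separate (year, value) records into training and held-out sets."""
    train = [r for r in records if r[0] <= last_training_year]
    test = [r for r in records if r[0] > last_training_year]
    return train, test


def mean_absolute_error(predictions, observations):
    return mean(abs(p - o) for p, o in zip(predictions, observations))


# Hypothetical annual values for 1979-2019 (a simple trend, for illustration).
records = [(year, 0.02 * (year - 1979)) for year in range(1979, 2020)]
train, test = split_by_year(records, last_training_year=2018)

# A deliberately trivial "model": predict the training-period average.
baseline = mean(value for _, value in train)
predictions = [baseline for _ in test]
score = mean_absolute_error(predictions, [value for _, value in test])

print(f"{len(train)} training years, {len(test)} test year(s), MAE = {score:.3f}")
```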

Results in the article are presented in a shroud of technical jargon, but the essence is simplified here.

GenCast does better than the most recent ECMWF model, especially at low elevations over the first 3 days, perhaps the part of this diagram of most interest to most of us.

Remember that the GenCast system does not use atmospheric science theory or any standard equations, just recursive, “Deep” pattern recognition. And the GenCast predictions were generated using a fraction of the computing time of the best science-based models, and at a coarser spatial resolution.

I wonder if we can read GenCast’s “mind” and learn anything from the patterns the program has discovered that allows these accurate forecasts.

Sadly, GenCast, while offering slightly improved forecasts, can’t defeat chaos. The accuracy of those forecasts still fades looking farther into the future.

But perhaps this study means that it might become faster and more accurate to go directly from measurements of surface and atmospheric conditions to detailed forecasts without the use of the very complex weather prediction models.

A recurring theme in these essays is the thanks we owe to the scientists and technicians who collect, curate and make available the huge trove of direct measurements of the state of the climate system on which all of our understanding and planning depends. We have just added another application that is dependent on this solid base of measurements.

An Appreciation For What We Can See

While dissecting small differences in the accuracy of mid-term weather forecasting, we might pause to consider what an amazing success story our current weather monitoring and prediction system is. What we receive on our screens, of any size and regardless of source, results from an astounding level of international collaboration and cooperation among researchers and technicians from around the world.

Andrew Blum captures this wonderfully in The Weather Machine with his descriptions of the open social and work environment he encountered at the ECMWF. But supporting this dynamic center is a worldwide network of people ensuring the continuity and accuracy of the systems that generate the basic data sets required to produce those predictions.

And the importance of accurate short-term forecasts, especially “now-casting” as it is called, focusing on the next several hours, cannot be overemphasized. Being prepared for extreme events is crucial, and this is reflected in the constantly updated reports on the progress of storms and on when and where we should be ready to take cover.

But the hard reality is that no matter how much short-term forecasts improve (or how they are generated), accuracy will still decline for both temperature and precipitation as we look farther into the future, with climatological averages becoming just as accurate as those modeled forecasts beyond a certain horizon.
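The way forecast skill fades toward climatology can be sketched with a toy model. The growth rate and starting error below are hypothetical, and the logistic shape of the error curve is an assumption; the skill score itself is the standard mean-squared-error skill score measured against a climatological forecast.

```python
import math

# Toy model of forecast skill versus lead time. Assumptions (hypothetical):
# forecast error variance starts small and grows toward the error of simply
# forecasting the long-term average, following a logistic curve.
# Skill score: 1 - MSE_forecast / MSE_climatology
#   1 = perfect, 0 = no better than the climatological average.

CLIMATOLOGY_MSE = 1.0  # error of always forecasting the average (normalized)
INITIAL_MSE = 0.01     # hypothetical error at lead time zero
GROWTH_PER_DAY = 0.8   # hypothetical growth rate of the error


def forecast_mse(lead_days: float) -> float:
    """Logistic growth of error variance, saturating at climatology."""
    ratio = (CLIMATOLOGY_MSE - INITIAL_MSE) / INITIAL_MSE
    return CLIMATOLOGY_MSE / (1 + ratio * math.exp(-GROWTH_PER_DAY * lead_days))


def skill(lead_days: float) -> float:
    return 1 - forecast_mse(lead_days) / CLIMATOLOGY_MSE


for day in range(0, 16, 3):
    print(f"day {day:>2}: skill = {skill(day):.2f}")
```

Past the point where skill falls to near zero, the model forecast carries no more information than the climatological average for that date.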

For now, let’s be thankful that we can have some idea of what the weather/energy machine has in store for us for the next couple of days. Although I think the U.S. Weather Service is right to limit its predictions of temperature and type of weather to seven days into the future, and of precipitation amounts to three days, I am sure that I will keep looking at those ten-day forecasts on the commercial sites as well, just to see how much they change as the actual day approaches. Stay tuned!

Acknowledgement: Thanks to Patrick Aber for reviewing, commenting on, and improving the presentation of AI, ML and LLM!

Sources

Much of the material on chaos and weather prediction draws on a more in-depth presentation in chapter 4 of a book with the same title as this Substack site:

Aber, J. 2023. Less Heat, More Light: A Guided Tour of Weather, Climate, and Climate Change. Yale University Press.

Andrew Blum’s book is:

Blum, A. 2020. The Weather Machine: A Journey Inside the Forecast. Ecco.

And John Houghton’s book is:

Houghton, J. 2015. Global Warming: The Complete Briefing. Cambridge University Press.

The image of the structure of a weather prediction model was produced by NOAA and so is in the public domain. It can be found here:

https://en.wikipedia.org/wiki/Numerical_weather_prediction

The U.S. Weather Service page on prediction accuracy is under “verification” and is here:

http://www.wpc.ncep.noaa.gov/html/hpcverif.shtml#qpf

The article comparing the accuracy of GenCast and ECMWF forecasts was published as “open access,” which allows modification and usage of figures from that article in this essay. The article is here:

https://www.nature.com/articles/s41586-024-08252-9

And a news article based on this paper is here:

https://www.nytimes.com/2024/12/04/science/google-ai-weather-forecast.html?smid=em-share

The neural network diagram has been released to the public domain from:

https://commons.wikimedia.org/wiki/File:Neural_network_example.svg